Synadia Connectors: Outlets

So you’ve got a NATS system humming along, streaming data at lightning speed to various parts of your infrastructure. Now you’re thinking: how do I get this data flowing into my external systems?

Welcome to the world of outlets, your gateway for getting NATS data wherever it needs to go. In this guide, we’ll explore what outlets are and how they work. Then we’ll walk through three practical examples: archiving messages to Amazon S3, writing operational data to MongoDB, and posting notifications to an HTTP webhook.

What Are NATS Outlets?

Outlets are connectors that send messages from NATS to external systems. Think of them as the exit points for data flowing out of your NATS infrastructure to databases, storage systems, webhooks, and more.

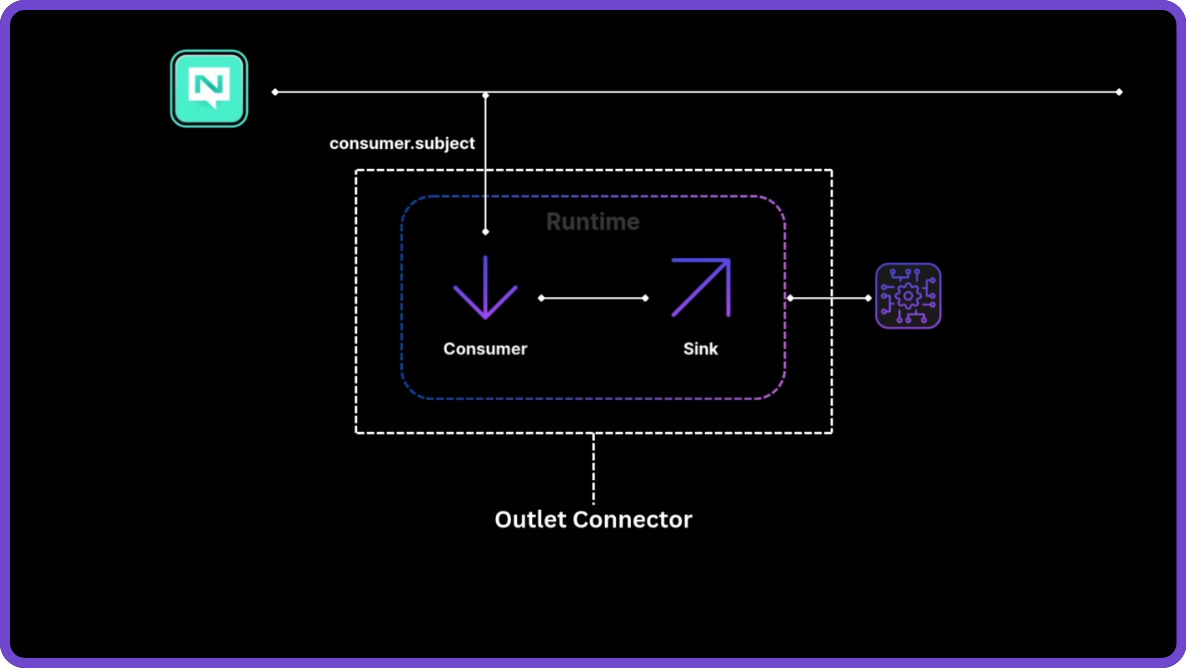

How Outlets Work

When you create an outlet, it runs within a lightweight runtime environment that manages your data pipeline. This runtime contains two key components:

1. The Consumer

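The consumer connects to your existing NATS infrastructure and reads data through either NATS Core or JetStream. It subscribes to the subject you specify and receives messages with standard NATS semantics. Core NATS delivers each message at most once to whoever is listening at that moment. A JetStream consumer reads from a persisted stream with acknowledgements, so the outlet can pick up messages that were stored while it was offline.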

2. The Sink

Once the consumer captures messages, the sink handles the routing and delivery to your target destination—whether that’s a database, webhook, or another messaging service.

Together, these components form a single pipeline: NATS subjects → consumer → sink → external system.
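The consumer/sink split can be sketched in a few lines of Python. This is a minimal, in-process model under stated assumptions, not the Synadia runtime. There is no NATS connection: an in-memory list stands in for the messages a subscription would receive. `Message`, `ListSink`, `subject_matches`, and `run_pipeline` are hypothetical names. `subject_matches` follows the NATS wildcard rules, where `*` matches exactly one token and `>` matches one or more trailing tokens.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class Message:
    """Simplified stand-in for a NATS message: a subject plus raw bytes."""
    subject: str
    data: bytes


@dataclass
class ListSink:
    """Hypothetical sink that 'delivers' by appending to a list.

    A real sink would write to S3, MongoDB, an HTTP endpoint, and so on.
    """
    delivered: List[Message] = field(default_factory=list)

    def write(self, msg: Message) -> None:
        self.delivered.append(msg)


def subject_matches(pattern: str, subject: str) -> bool:
    """NATS-style matching: '*' = one token, '>' = one or more trailing tokens."""
    p_tokens = pattern.split(".")
    s_tokens = subject.split(".")
    for i, tok in enumerate(p_tokens):
        if tok == ">":
            return len(s_tokens) > i  # '>' needs at least one remaining token
        if i >= len(s_tokens):
            return False
        if tok != "*" and tok != s_tokens[i]:
            return False
    return len(p_tokens) == len(s_tokens)


def run_pipeline(source: Iterable[Message], subject: str, sink: ListSink) -> int:
    """Consumer step: keep messages on the subject. Sink step: write them out."""
    count = 0
    for msg in source:
        if subject_matches(subject, msg.subject):
            sink.write(msg)
            count += 1
    return count


source = [
    Message("sensors.temp.room1", b'{"temp_c": 21.5}'),
    Message("orders.new", b'{"id": 7}'),
    Message("sensors.temp.room2", b'{"temp_c": 48.0}'),
]
sink = ListSink()
print(run_pipeline(source, "sensors.>", sink))  # -> 2
print([m.subject for m in sink.delivered])  # -> ['sensors.temp.room1', 'sensors.temp.room2']
```

Keeping the two roles separate is what lets the same consumer configuration feed an S3, MongoDB, or HTTP sink: in this model, only the `write` step would change.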

Three Practical Outlet Examples

Let’s explore three real-world scenarios that demonstrate how outlets can integrate NATS with your external systems.

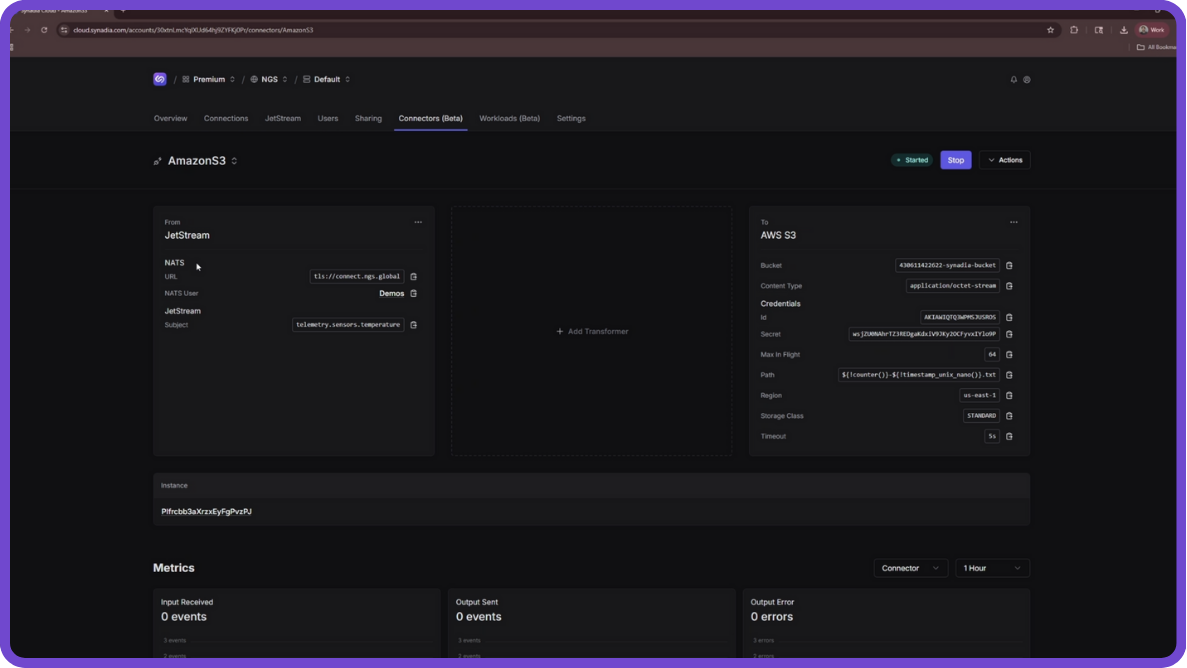

1. Amazon S3 Outlet: Long-Term Data Storage

Use Case: You’re building an application that generates logs, user events, or telemetry data, and you need long-term storage for compliance or analytics.

Why S3? Cost-effective, durable storage that scales with your application.

How It Works:

- The consumer monitors your NATS subject for messages

- The sink automatically streams data from your NATS feeds directly into S3 buckets

- Messages are stored as individual files with configurable paths and naming

Setup Highlights:

- Create an S3 bucket in your AWS console

- Generate access credentials (for example, an IAM user access key) with permission to write to the bucket

- On the Synadia platform, select the Amazon S3 sink connector

- Configure your consumer (JetStream or NATS Core) and subject

- Add your bucket name, region, and credentials

- Configure file paths (use counters and timestamps for organization)

- Select the content type (application/octet-stream for raw NATS messages)

The result? Automatic, reliable archiving of your data streams with no custom integration code.
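The advice to use counters and timestamps in file paths can be illustrated with a small key-naming helper. This is a sketch of one possible layout, not the connector's own path syntax. The `object_key` function, the `archive` prefix, and the date-partitioned structure are assumptions. The goal is that every message gets a unique, sortable key, which keeps a one-file-per-message archive easy to browse by date.

```python
from datetime import datetime, timezone
from itertools import count

# Raw NATS payload bytes are stored as-is, without parsing.
CONTENT_TYPE = "application/octet-stream"


def object_key(prefix: str, subject: str, seq: int, ts: datetime) -> str:
    """Build a date-partitioned, sortable S3 key for one message (hypothetical layout)."""
    ts = ts.astimezone(timezone.utc)  # normalize so keys sort consistently
    return (
        f"{prefix}/{subject}/"
        f"{ts:%Y/%m/%d}/"  # one "folder" per day
        f"{ts:%Y%m%dT%H%M%SZ}-{seq:010d}.bin"  # timestamp plus zero-padded counter
    )


counter = count(1)  # monotonically increasing counter for this outlet
ts = datetime(2024, 5, 1, 12, 30, 0, tzinfo=timezone.utc)
print(object_key("archive", "events.user", next(counter), ts))
# -> archive/events.user/2024/05/01/20240501T123000Z-0000000001.bin
```

If you were writing these objects yourself with boto3, the key and content type would map to the `Key` and `ContentType` parameters of `put_object`. The outlet takes care of that upload for you.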

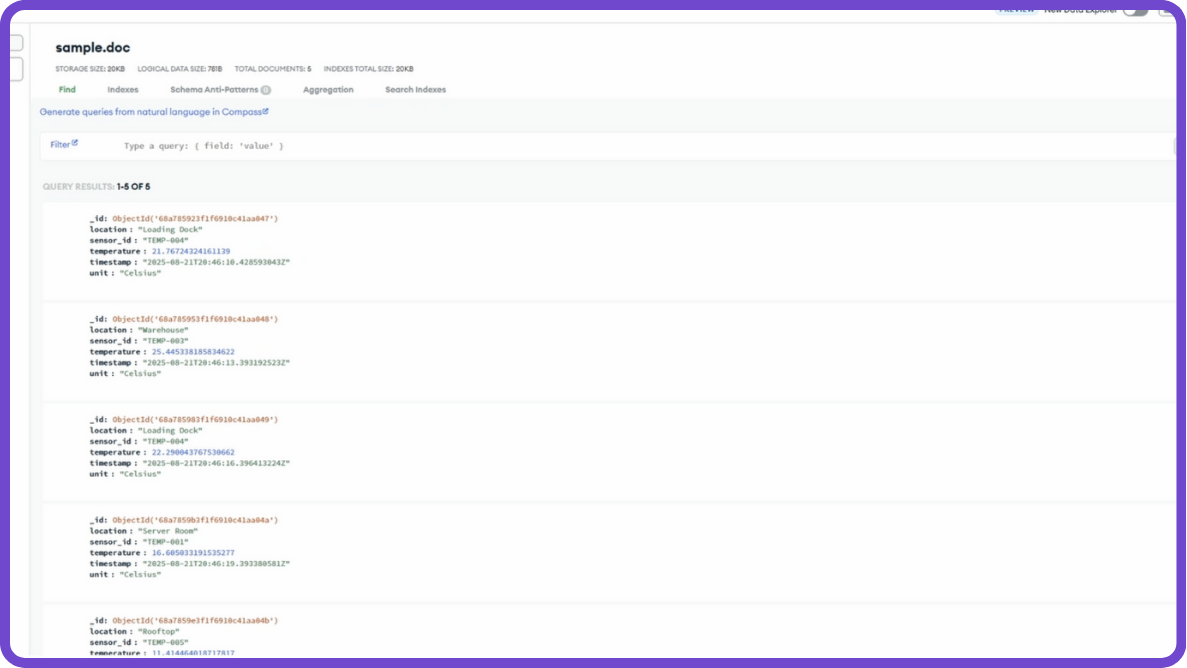

2. MongoDB Outlet: Real-Time Operational Data

Use Case: You want to monitor equipment performance in your manufacturing facility, giving your operations team real-time visibility into machine health and maintenance needs.

Why MongoDB? Its flexible document model is well suited to operational queries and real-time analysis of equipment data.

How It Works:

- The consumer reads messages from your NATS subject

- The sink transforms messages into MongoDB documents

- Data flows directly into your specified database and collection

Setup Highlights:

- Connect to your MongoDB cluster

- Create a database and collection

- Copy your cluster’s connection string, including the database user’s password

- On the Synadia platform, select the MongoDB sink connector

- Configure your consumer (NATS Core or JetStream) and subject

- Add your connection string, database, and collection names

- Configure document mapping using Bloblang (e.g., `root = this` to store entire messages)

Note: Bloblang is a data transformation language. For basic use cases, `root = this` maps each message, parsed as JSON, to a MongoDB document without changing it. Future tutorials will cover advanced transformations and filtering.
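In Bloblang, `this` refers to the incoming message parsed as structured (JSON) data. Assigning it to `root` makes the whole parsed message the output. The Python sketch below mimics that behavior to show what would land in the collection. It illustrates the mapping only and is not how the connector is implemented. The `map_root_this` function and the sample equipment payload are hypothetical.

```python
import json
from typing import Any, Dict


def map_root_this(payload: bytes) -> Dict[str, Any]:
    """Emulate the Bloblang mapping `root = this`.

    `this` is the message body parsed as JSON. Assigning it to `root`
    means the output document is the entire parsed message, unchanged.
    """
    doc = json.loads(payload)
    if not isinstance(doc, dict):
        # A MongoDB document is a key/value object, so a top-level
        # JSON array or scalar cannot be stored as a document as-is.
        raise ValueError("message body must be a JSON object")
    return doc


msg = b'{"machine_id": "press-04", "temp_c": 71.3, "vibration_mm_s": 4.2}'
doc = map_root_this(msg)
print(doc["machine_id"], doc["temp_c"])  # -> press-04 71.3
```

With the PyMongo driver, a document like this would be written with `collection.insert_one(doc)`. The MongoDB outlet handles that write for you once the mapping is configured.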

3. HTTP Outlet: Real-Time Webhook Notifications

Use Case: You have a sensor monitoring system tracking temperature data. When a sensor detects a critical reading, you want to notify your team immediately.

Why HTTP? Direct integration with webhooks and external APIs for instant notifications and real-time responses.

How It Works:

- The consumer monitors your NATS subject for sensor data

- The sink posts messages directly to your webhook endpoint

- External systems receive real-time updates as events occur

Setup Highlights:

- Set up your HTTP endpoint (tools like ngrok make it easy to expose a local endpoint for testing)

- On the Synadia platform, select the HTTP sink connector

- Configure your consumer and subject

- Add your endpoint URL

- Configure authentication if needed

The result? A real-time pipeline from NATS to HTTP, creating instant notifications and triggering automated responses in external systems.
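For local testing, the endpoint only needs to accept a POST and read the body. The sketch below uses Python’s standard library to stand up a throwaway webhook receiver, then posts two readings to it with a small function standing in for the HTTP sink. The `/alerts` path, the JSON shape, and the 40 °C "critical" threshold are assumptions for illustration. The exact request the HTTP sink sends (headers, body encoding) depends on your outlet configuration. If the outlet needs to reach the receiver from outside your machine, point ngrok (or a similar tool) at its port.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

CRITICAL_TEMP_C = 40.0  # hypothetical alert threshold
alerts = []  # readings the receiver flagged as critical


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly as many bytes as the client says it sent.
        length = int(self.headers.get("Content-Length", 0))
        reading = json.loads(self.rfile.read(length))
        if reading.get("temp_c", 0) >= CRITICAL_TEMP_C:
            alerts.append(reading)  # in practice: notify the team, open a ticket, etc.
        self.send_response(204)  # acknowledge with no body
        self.end_headers()

    def log_message(self, fmt, *args):
        pass  # keep the demo output quiet


# Port 0 asks the OS for any free port.
server = HTTPServer(("127.0.0.1", 0), WebhookHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_port}/alerts"


def post_reading(reading: dict) -> int:
    """Stand-in for the HTTP sink: POST one message body as JSON."""
    req = urllib.request.Request(
        url,
        data=json.dumps(reading).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status


print(post_reading({"sensor": "room1", "temp_c": 21.5}))  # -> 204
print(post_reading({"sensor": "boiler", "temp_c": 87.0}))  # -> 204
print(alerts)  # -> [{'sensor': 'boiler', 'temp_c': 87.0}]
server.shutdown()
server.server_close()
```

Here the receiver decides which readings are critical, so the outlet can forward every message on the subject unchanged.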

Key Takeaways

Focus on Your Logic, Not Integration Plumbing

Outlets handle connection management, retries, and message formatting for you, so you can focus on your application logic instead of writing custom integration code.

Consistent Architecture

Whether you’re sending to object storage, databases, or webhooks, the outlet architecture remains consistent: consumer reads from NATS, sink writes to external system.

Production-Ready

Every outlet comes with built-in monitoring, logging, and metrics—making it easy to track connector health and troubleshoot issues at a glance.

Many Sinks Out of the Box

The Synadia platform provides numerous pre-built sink connectors that cover common integration scenarios without custom development.

What’s Next?

Now that you understand outlets, explore inlets—the flip side of the connector story. Inlets bring data from external systems into your NATS streams, completing the data flow cycle.

Together, inlets and outlets give you bidirectional data flow between NATS and the rest of your infrastructure. Stay tuned for more tutorials in the connector series, including deep dives into transformers and advanced connector configurations!