Synadia Connectors: Inlets

When your data is scattered across external systems such as APIs, databases, and file stores, bringing it all into your NATS ecosystem can seem daunting. That’s where inlets come in: they are connectors that ingest external data and feed it into NATS subjects and streams.

In this guide, we’ll explore what inlets are and how they work under the hood, then walk through three practical examples: Amazon S3, MongoDB, and HTTP.

What Are NATS Inlets?

Inlets are connectors that pull messages from external systems into NATS. Think of them as the entry points for data flowing into your NATS infrastructure from the outside world.

How Inlets Work

When you create an inlet, it runs within a lightweight runtime environment that manages your data pipeline. This runtime contains two key components:

1. The Source

The source connects to your external system and reads data from it, whether that is a database, a webhook, a message queue, or another supported data source. It monitors the external system continuously, pulling in data according to your configuration.

2. The Producer

Once the source captures data, the producer handles formatting and delivery. It publishes messages to your specified NATS subjects or streams, getting the data into your NATS infrastructure—whether that’s NATS Core or JetStream.

Together, these components create a seamless pipeline: external system → source → producer → NATS subjects.
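To make the source/producer split concrete, here is a minimal Python sketch of the same pipeline shape. It is not the Synadia runtime: the `ListSource`, `Producer`, and `run_inlet` names are hypothetical, and an in-memory list stands in for a NATS connection so the example runs without a server.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Message:
    subject: str
    data: bytes


class ListSource:
    """Hypothetical source: stands in for an external system by replaying a list."""

    def __init__(self, records: Iterable[str]) -> None:
        self._records = list(records)

    def read(self) -> Iterator[str]:
        yield from self._records


@dataclass
class Producer:
    """Hypothetical producer: encodes records and hands them to a publish callback."""

    subject: str
    publish: Callable[[Message], None]

    def send(self, record: str) -> None:
        self.publish(Message(self.subject, record.encode("utf-8")))


def run_inlet(source: ListSource, producer: Producer) -> int:
    """Move every record from the source to the producer and return the count."""
    count = 0
    for record in source.read():
        producer.send(record)
        count += 1
    return count


# In-memory stand-in for a NATS connection (assumption for illustration only).
published: list[Message] = []
source = ListSource(["order-1", "order-2"])
producer = Producer(subject="orders.new", publish=published.append)
sent = run_inlet(source, producer)
```

In a real deployment the `publish` callback would wrap a NATS client's publish call. That wiring is left out here because the inlet runtime handles it for you.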

Three Practical Inlet Examples

Let’s dive into three real-world scenarios that demonstrate the power and versatility of NATS inlets.

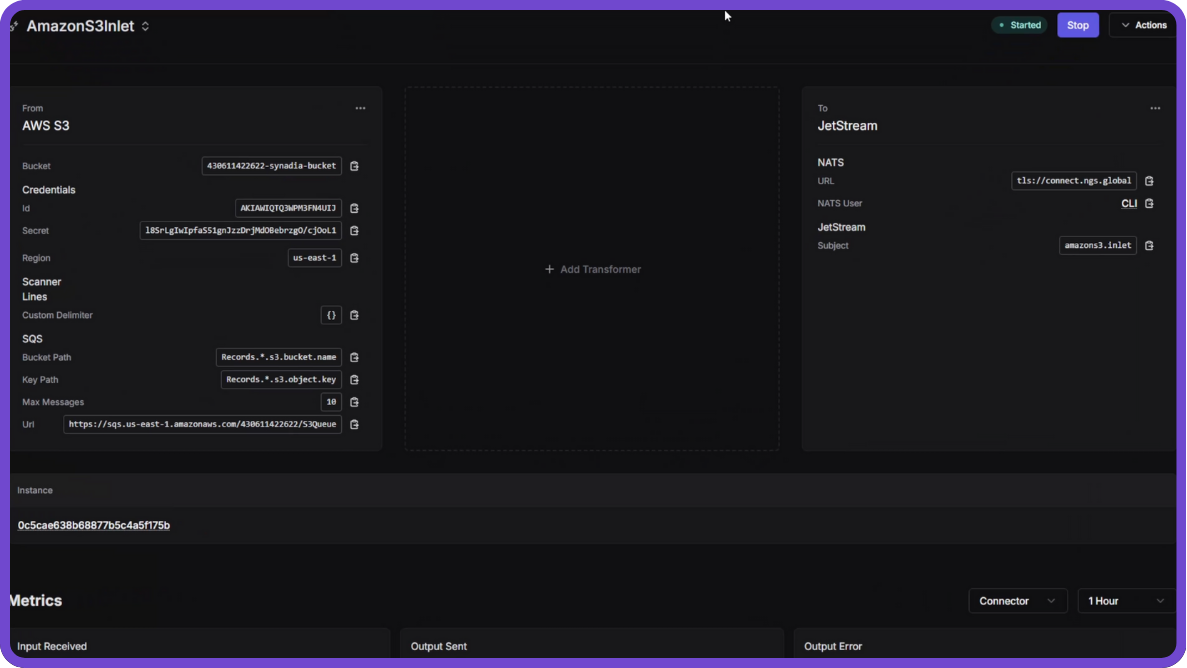

1. Amazon S3 Inlet: Automated File Processing

Use Case: You have a data processing system that needs to handle batch files. When new files are uploaded to S3 storage, you want to process them automatically.

How It Works:

- Configure an SQS queue to receive S3 event notifications

- Set up S3 bucket event notifications to trigger on new file uploads

- Create an inlet that monitors the SQS queue

- When a new file arrives, the inlet downloads it, parses the content, and publishes to NATS
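The steps above can be sketched in Python. This is an illustration of the flow, not the connector's implementation. The notification layout follows the standard S3 event message format, while the function names, the sample bucket and key, and the choice to skip empty lines in the "lines" scanner are assumptions.

```python
import json
from urllib.parse import unquote_plus


def objects_from_sqs_body(body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for ObjectCreated events in an SQS message body."""
    event = json.loads(body)
    found = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become "+").
        found.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return found


def scan_lines(content: bytes) -> list[bytes]:
    """Split file content into one payload per non-empty line (assumed behavior)."""
    return [line for line in content.splitlines() if line.strip()]


# Hypothetical notification for a file uploaded to "example-bucket".
sample_body = json.dumps(
    {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": "example-bucket"},
                    "object": {"key": "incoming/daily+report.csv"},
                },
            }
        ]
    }
)

objects = objects_from_sqs_body(sample_body)
payloads = scan_lines(b"a,1\nb,2\n\n")
```

Each entry in `payloads` would become one NATS message. Downloading the object itself is omitted, since it requires AWS credentials and a client library.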

Setup Highlights:

- Create an SQS queue with appropriate access policies

- Configure S3 bucket event notifications (filter by file type if needed)

- On the Synadia platform, select the Amazon S3 source connector

- Configure your bucket, region, and credentials

- Select a scanner type (e.g., “lines” for text files)

- Add your SQS URL and specify your NATS stream subject
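For the first setup step, the queue needs a policy that lets S3 deliver notifications to it. The sketch below builds such a policy document in Python. The ARNs are placeholders and the helper name is hypothetical, so adapt both to your account and check the result against your own security requirements.

```python
import json


def s3_to_sqs_policy(queue_arn: str, bucket_arn: str) -> dict:
    """Build an SQS access policy that allows one S3 bucket to send messages."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                # Restrict delivery to notifications from this bucket only.
                "Condition": {"ArnLike": {"aws:SourceArn": bucket_arn}},
            }
        ],
    }


# Placeholder ARNs for illustration only.
policy = s3_to_sqs_policy(
    queue_arn="arn:aws:sqs:us-east-1:123456789012:example-queue",
    bucket_arn="arn:aws:s3:::example-bucket",
)
policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string is what you would paste into the queue's access policy.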

The result? Automatic, seamless batch processing without manual intervention.

2. MongoDB Inlet: Real-Time Database Events

Use Case: Build reactive applications that immediately respond to database changes—perfect for cache invalidation, live dashboards, and real-time synchronization.

How It Works: MongoDB Change Streams provide a continuous feed of database modifications. The inlet taps into these change streams and routes events directly to NATS, giving you real-time awareness of all database activity.

Setup Highlights:

- Grab your MongoDB connection URL

- On the Synadia platform, select the MongoDB Change Stream source

- Add your connection string

- Specify your database and collection

- Configure your JetStream subject

Note: MongoDB change stream events include metadata about the operation, along with your actual document data. For cleaner data, you can use transformers (covered in future tutorials) to extract just what you need.
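To show what that metadata looks like, here is a small Python sketch that pulls the document out of a change event on the consumer side. The field names (`operationType`, `ns`, `documentKey`, `fullDocument`, `updateDescription`) come from the MongoDB change event format. The `extract_document` helper and the sample values are hypothetical, and this is not the transformer syntax. How the inlet serializes events into NATS messages may also differ.

```python
from typing import Any, Optional


def extract_document(change_event: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return the document payload of a change event, or None if it has none."""
    op = change_event.get("operationType")
    if op in ("insert", "replace"):
        return change_event.get("fullDocument")
    if op == "update":
        # fullDocument appears on updates only when the stream requests it;
        # otherwise fall back to the fields that changed.
        full = change_event.get("fullDocument")
        if full is not None:
            return full
        return change_event.get("updateDescription", {}).get("updatedFields")
    return None  # e.g. delete events carry only documentKey


# Hypothetical events for an "orders" collection.
insert_event = {
    "_id": {"_data": "<resume token>"},
    "operationType": "insert",
    "ns": {"db": "shop", "coll": "orders"},
    "documentKey": {"_id": "abc123"},
    "fullDocument": {"_id": "abc123", "total": 42},
}
update_event = {
    "operationType": "update",
    "ns": {"db": "shop", "coll": "orders"},
    "documentKey": {"_id": "abc123"},
    "updateDescription": {"updatedFields": {"total": 50}, "removedFields": []},
}
delete_event = {
    "operationType": "delete",
    "ns": {"db": "shop", "coll": "orders"},
    "documentKey": {"_id": "abc123"},
}
```

A subscriber could apply `extract_document` to each decoded message and ignore events that return `None`.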

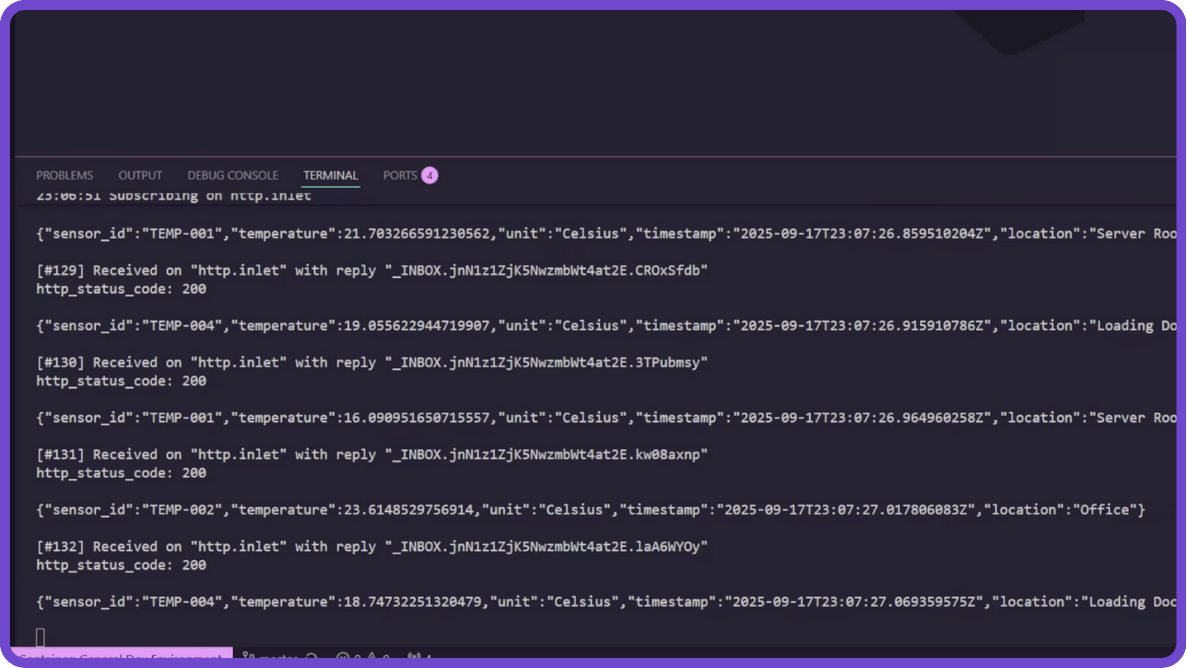

3. HTTP Inlet: Webhook Integration

Use Case: Receive data from external APIs or webhook notifications. When events occur in third-party services, capture and process that data immediately.

How It Works: Set up an HTTP endpoint that is reachable from outside your network. The inlet continuously polls this endpoint using the configured HTTP method, capturing each response and routing it into your NATS infrastructure.
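The polling behavior can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the connector's code: the `poll` and `fetch_url` helpers, the `webhooks.events` subject, and the rule of skipping empty responses are all hypothetical. The demo uses a fake fetcher so it runs without a network connection.

```python
import time
import urllib.request
from typing import Callable


def fetch_url(url: str, timeout: float = 5.0) -> bytes:
    """Issue a GET request and return the response body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def poll(
    fetch: Callable[[], bytes],
    publish: Callable[[str, bytes], None],
    subject: str,
    rounds: int,
    interval: float = 1.0,
) -> int:
    """Call fetch `rounds` times, publishing each non-empty body to the subject."""
    published = 0
    for i in range(rounds):
        body = fetch()
        if body:
            publish(subject, body)
            published += 1
        if i < rounds - 1:
            time.sleep(interval)
    return published


# Fake fetcher and in-memory outbox (assumptions for illustration only).
responses = iter([b'{"event": "created"}', b"", b'{"event": "updated"}'])
outbox: list[tuple[str, bytes]] = []
count = poll(
    fetch=lambda: next(responses),
    publish=lambda subj, data: outbox.append((subj, data)),
    subject="webhooks.events",
    rounds=3,
    interval=0.0,
)
```

To poll a real endpoint, you would pass something like `lambda: fetch_url(your_endpoint_url)` as `fetch`, where `your_endpoint_url` is the address you exposed in the setup steps.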

Setup Highlights:

- Create your HTTP server endpoint (tools like Ngrok make it accessible from anywhere)

- On the Synadia platform, select the HTTP source connector

- Add your endpoint URL

- Configure HTTP method (GET, POST, etc.)

- Choose NATS Core or JetStream and specify your subject

The result? Real-time integration with external services and immediate event processing.

Key Takeaways

Focus on Your Data, Not Integration Code

Inlets handle connection mechanics and ingestion logistics automatically, so you can focus on processing your data instead of building custom integration code.

Flexible Architecture

Whether you’re pulling from object storage, databases, or webhooks, the inlet architecture remains consistent: the source monitors the external system, and the producer publishes to NATS.

Production-Ready

Inlets come with built-in monitoring, logging, and metrics—helping you track connector health and troubleshoot issues quickly.

Many Sources Out of the Box

The Synadia platform provides numerous pre-built source connectors, covering common integration scenarios without requiring custom development.

What’s Next?

Now that you understand inlets, the next piece of the puzzle is transformers—the data processing component that lets you transform, enrich, and manipulate messages as they flow through NATS. Stay tuned for the next tutorial in this connector series!