Atomic Batch Publishing in NATS 2.12: All-or-Nothing Message Guarantees

NATS 2.12 introduces a powerful new feature called atomic batch publishing. If you’re building event-sourced systems or need to fan out messages to multiple services with consistency guarantees, this one’s for you.

Watch the video overview: Atomic Batch Publishing in NATS 2.12

The Problem with Partial Writes

Without atomic batching, each publish operation is independent. Imagine you need to write five related messages and your connection drops after the third. You’re left with a partial write—your data is inconsistent, and your system is in an undefined state.

Atomic batch publishing solves this by giving you all-or-nothing guarantees: either every message in your batch commits together, or none of them do.

What This Unlocks

Avoiding partial writes with atomic batching is the foundation for several important patterns:

Consistent multi-consumer fan-out. When multiple services consume from the same stream, they all see the exact same set of messages in the same order. No service sees a partial batch while another sees the full batch.

Idempotent full-state replacement. Instead of publishing deltas, you can publish complete state and let consumers replace what they have. When your full state spans multiple messages, atomic batching ensures consumers never see a half-written snapshot.

Snapshot + delta bootstrapping. New consumers can load a point-in-time snapshot and then apply only the events that came after. Without atomic batching, a snapshot written as multiple messages could be incomplete when read.

Multi-event commits. A single logical operation often produces multiple events. For example, an order might emit OrderCreated, InventoryReserved, and PaymentInitiated. Consumers should see all three or none—not a partially committed transaction.

How It Works

Atomic batch publishing is a stream configuration option that lets you atomically publish multiple messages into a JetStream stream.

Here’s what happens under the hood:

- Staging: When you start a batch, messages are staged invisibly on the server. Consumers don’t see anything yet.

- Sequencing: Each message gets a batch sequence number, and your messages sit in a staging area waiting.

- Commit: On your last message, you include a commit header. The server then commits everything atomically—all messages appear at once.
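The stage-then-commit flow above can be illustrated with a toy in-memory model. This is only a sketch of the visible behavior, not how the NATS server implements it; the `stream` type, its fields, and the `publish` method are hypothetical names invented for this example.

```go
package main

import (
	"errors"
	"fmt"
)

// stream is a toy stand-in for a JetStream stream (hypothetical type).
type stream struct {
	committed []string            // messages visible to consumers
	staged    map[string][]string // batch ID -> messages staged so far
}

func newStream() *stream {
	return &stream{staged: make(map[string][]string)}
}

// publish stages msg under batchID. seq must be the next number in the
// batch (1, 2, 3, ...). When commit is true, every staged message of the
// batch becomes visible in one step.
func (s *stream) publish(batchID string, seq int, msg string, commit bool) error {
	if seq != len(s.staged[batchID])+1 {
		// Assumption of this model: a gap discards the whole batch.
		delete(s.staged, batchID)
		return errors.New("batch sequence gap")
	}
	s.staged[batchID] = append(s.staged[batchID], msg)
	if commit {
		s.committed = append(s.committed, s.staged[batchID]...)
		delete(s.staged, batchID)
	}
	return nil
}

func main() {
	s := newStream()
	_ = s.publish("b1", 1, "OrderCreated", false)
	_ = s.publish("b1", 2, "InventoryReserved", false)
	fmt.Println("visible before commit:", len(s.committed))
	_ = s.publish("b1", 3, "PaymentInitiated", true)
	fmt.Println("visible after commit:", len(s.committed))
}
```

Nothing is visible until the message carrying the commit flag arrives, at which point all three messages appear together.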

The Batch Headers

The server tracks batches using three headers:

| Header | Purpose |
|---|---|
| NATS-Batch-ID | A unique identifier for your batch (max 64 characters) |
| NATS-Batch-Sequence | An incrementing number for each message in the batch |
| NATS-Batch-Commit | Tells the server to commit all staged messages |
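To make the header layout concrete, here is a small sketch that builds the header set for each message of a batch. The header names and the `true` commit value are copied as spelled in this article; the helper `batchHeaders` and the plain `map[string]string` representation are assumptions made for illustration, not part of any NATS client API.

```go
package main

import (
	"fmt"
	"strconv"
)

// Header names as spelled in this article.
const (
	hdrBatchID  = "NATS-Batch-ID"
	hdrBatchSeq = "NATS-Batch-Sequence"
	hdrCommit   = "NATS-Batch-Commit"
)

// batchHeaders returns one header map per message for a batch of n
// messages. Sequence numbers start at 1, and only the last message
// carries the commit header.
func batchHeaders(batchID string, n int) []map[string]string {
	out := make([]map[string]string, 0, n)
	for i := 1; i <= n; i++ {
		h := map[string]string{
			hdrBatchID:  batchID,
			hdrBatchSeq: strconv.Itoa(i),
		}
		if i == n {
			h[hdrCommit] = "true" // value taken from this article's examples
		}
		out = append(out, h)
	}
	return out
}

func main() {
	for _, h := range batchHeaders("demo-batch", 3) {
		fmt.Println(h)
	}
}
```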

Validation Rules

Your batch will be rejected if:

- The batch ID exceeds 64 characters

- There are gaps in your sequence numbers

- The stream uses async persist mode (which can’t guarantee atomicity)

- Duplicate messages exist in the batch (as of 2.12.1)
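Two of these rules can be checked on the client before anything is sent. The sketch below covers only the batch ID length and sequence gaps; `validateBatch` and its error values are hypothetical names, and the server remains the authority on what it accepts.

```go
package main

import (
	"errors"
	"fmt"
)

const maxBatchIDLen = 64 // limit stated in the rules above

var (
	errIDTooLong = errors.New("batch ID exceeds 64 characters")
	errSeqGap    = errors.New("gap in batch sequence numbers")
)

// validateBatch checks a batch ID and the sequence numbers a client
// intends to send, in send order. Sequences must run 1, 2, 3, ... with
// no gaps. len counts bytes, which equals characters for ASCII IDs such
// as UUIDs.
func validateBatch(batchID string, seqs []int) error {
	if len(batchID) > maxBatchIDLen {
		return errIDTooLong
	}
	for i, seq := range seqs {
		if seq != i+1 {
			return fmt.Errorf("%w: got %d at position %d", errSeqGap, seq, i+1)
		}
	}
	return nil
}

func main() {
	fmt.Println(validateBatch("order-42", []int{1, 2, 3}))
	fmt.Println(validateBatch("order-42", []int{1, 3}))
}
```

A check like this is cheap and fails fast, but it does not replace handling the server's rejection, since persist mode and duplicate detection are only known server-side.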

Optimistic Locking with Expected Sequence Headers

For scenarios where you need to guard against concurrent writes, atomic batching supports optional consistency checks:

- NATS-Expected-Last-Sequence: Only commit this batch if the stream’s last sequence matches this number. If someone else published while you were building your batch, the sequence moved, your batch fails, and you retry with fresh data. This header is only allowed on the first message of a batch.

- NATS-Expected-Last-Subject-Sequence: Same concept, but scoped to a specific subject.
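The read, attempt, retry loop that this kind of optimistic locking implies might look like the following. It runs against a toy in-memory stream rather than a real server; `stream`, `commitBatch`, `publishWithRetry`, and `errWrongLastSeq` are all hypothetical names for this sketch.

```go
package main

import (
	"errors"
	"fmt"
)

var errWrongLastSeq = errors.New("wrong last sequence")

// stream is a toy stand-in for a JetStream stream; only its last
// sequence number matters here.
type stream struct{ lastSeq uint64 }

// commitBatch models a batch of n messages whose first message carries
// an expected-last-sequence check: the batch commits only if the stream
// has not moved since the caller read lastSeq.
func (s *stream) commitBatch(expectedLast uint64, n int) error {
	if s.lastSeq != expectedLast {
		return errWrongLastSeq
	}
	s.lastSeq += uint64(n)
	return nil
}

// publishWithRetry re-reads the last sequence via read and retries when
// a concurrent writer got in first. attempts bounds the number of tries.
func publishWithRetry(s *stream, n, attempts int, read func() uint64) error {
	for i := 0; i < attempts; i++ {
		err := s.commitBatch(read(), n)
		if !errors.Is(err, errWrongLastSeq) {
			return err // nil on success, or an unrelated error
		}
	}
	return fmt.Errorf("gave up after %d attempts: %w", attempts, errWrongLastSeq)
}

func main() {
	s := &stream{lastSeq: 10}
	stale := true
	read := func() uint64 {
		if stale { // first read is stale, as if another publisher won the race
			stale = false
			return 9
		}
		return s.lastSeq
	}
	err := publishWithRetry(s, 3, 5, read)
	fmt.Println(err, s.lastSeq)
}
```

In a real publisher, each retry would also rebuild the batch from fresh data before sending it again, since the state it was derived from has changed.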

Limits

The default limits are listed below, and each can be changed in the server configuration:

- 1,000 messages per batch

- 50 batches in flight per stream

- 1,000 batches total per server

- 10 seconds of inactivity before a batch is abandoned

One important constraint: batches must go into a single stream—they can’t cross streams.
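A publisher can guard against the per-batch limits before it starts sending. The sketch below hard-codes the default values listed above; the `limits` struct and its field names are illustrative only and are not the server's configuration keys.

```go
package main

import (
	"fmt"
	"time"
)

// limits holds two of the defaults listed above (hypothetical type).
type limits struct {
	maxMsgsPerBatch int
	inactivity      time.Duration
}

var defaults = limits{maxMsgsPerBatch: 1000, inactivity: 10 * time.Second}

// fits reports whether a batch of n messages stays within the
// per-batch message limit.
func (l limits) fits(n int) bool {
	return n > 0 && n <= l.maxMsgsPerBatch
}

// abandoned reports whether a batch that has been idle for the given
// duration has exceeded the inactivity window.
func (l limits) abandoned(idle time.Duration) bool {
	return idle > l.inactivity
}

func main() {
	fmt.Println(defaults.fits(1000), defaults.fits(1001))
	fmt.Println(defaults.abandoned(11 * time.Second))
}
```

Note that splitting an oversized batch into several smaller ones gives up atomicity across the pieces, so a failed `fits` check is a signal to rethink the write rather than to chunk it silently.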

More questions about how atomic batch publishing is implemented? Review the full design here.

Use Cases

Two common scenarios where atomic batch publishing shines:

- Event Sourcing: When a single command produces multiple events that must be written together to maintain consistency. Your event history stays coherent.

- Batch Telemetry / Fan-out: When you need to send related messages to multiple services atomically. Either every service gets their message, or none of them do.

Getting Started

Creating an Atomic-Enabled Stream

First, create a stream with the allow-batch flag:

```shell
nats stream add orders --subjects="orders.>" --allow-batch
```

CLI Example: Single Subject

Subscribe to your stream, then publish atomically:

```shell
# Terminal 1: Subscribe
nats sub "orders.>"

# Terminal 2: Publish atomically
nats pub orders.events --send-on-newline --atomic
> order_created
> payment_received
> order_shipped
> ^D # Ctrl+D to commit
```

All three messages appear to the subscriber simultaneously when you send the commit signal.

CLI Example: Multiple Subjects

For fan-out scenarios, use manual headers to publish to different subjects in one atomic batch:

```shell
# Set a batch ID
BATCH_ID=$(uuidgen)

# Publish to different subjects with batch headers
nats pub orders.warehouse "ship item" \
  --header "NATS-Batch-ID:$BATCH_ID" \
  --header "NATS-Batch-Sequence:1"

nats pub orders.payment "charge card" \
  --header "NATS-Batch-ID:$BATCH_ID" \
  --header "NATS-Batch-Sequence:2"

nats pub orders.notification "send email" \
  --header "NATS-Batch-ID:$BATCH_ID" \
  --header "NATS-Batch-Sequence:3" \
  --header "NATS-Batch-Commit:true"
```

All three subscribers receive their message at the same time. If any message would have failed, none would have been delivered.

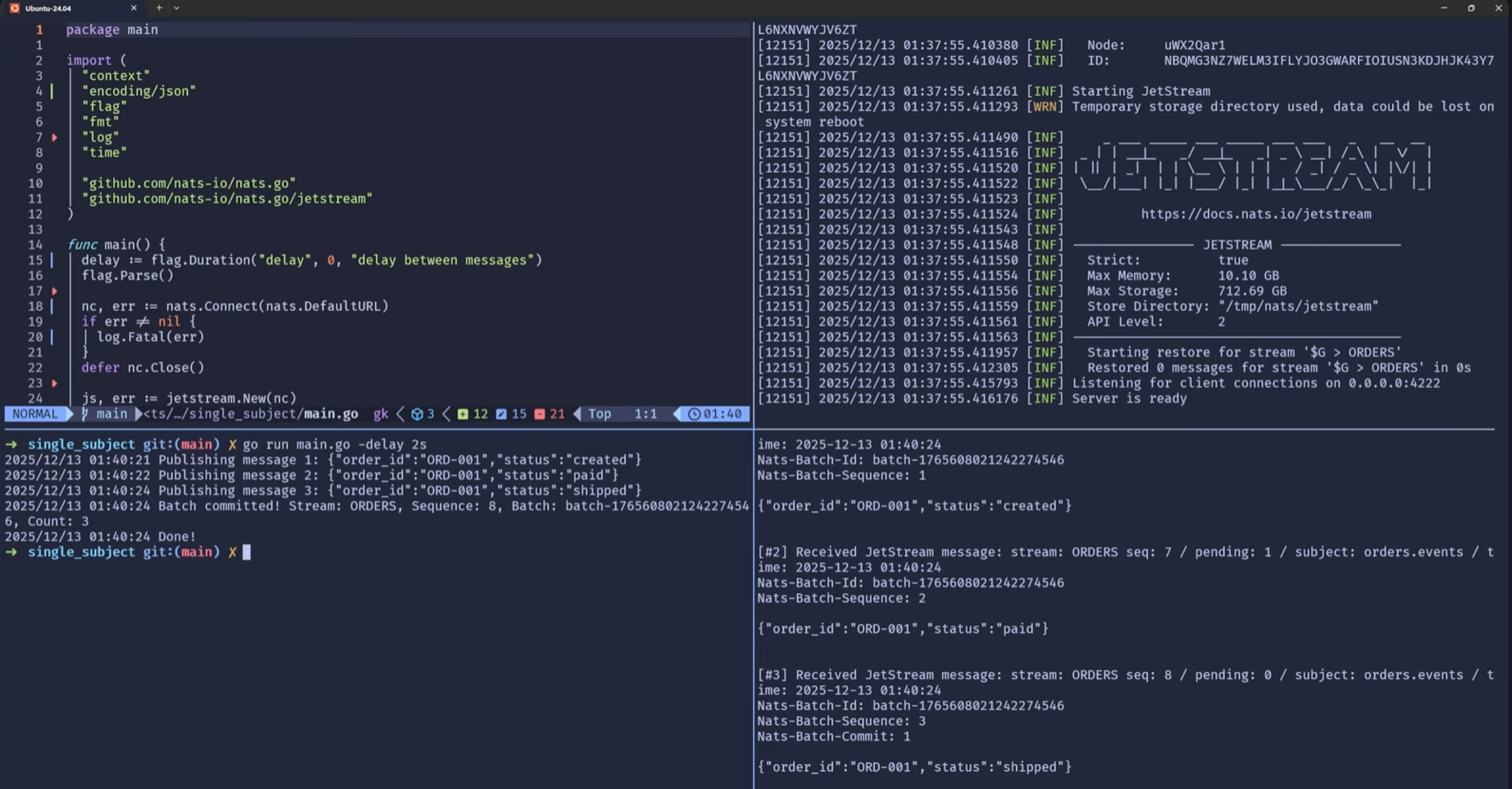

Go Code Example

Here’s how to publish an atomic batch in Go:

```go
import (
	"context"
	"strconv"

	"github.com/google/uuid" // assumed source of uuid.New()
	"github.com/nats-io/nats.go"
	"github.com/nats-io/nats.go/jetstream"
)

// runBatch publishes three messages to orders.events as one atomic batch.
func runBatch(js jetstream.JetStream) error {
	messages := []string{"order_created", "payment_received", "order_shipped"}
	batchID := uuid.New().String()

	for i, msg := range messages {
		m := nats.NewMsg("orders.events")
		m.Data = []byte(msg)

		// Set batch headers
		m.Header.Set("NATS-Batch-ID", batchID)
		m.Header.Set("NATS-Batch-Sequence", strconv.Itoa(i+1))

		// Set commit header on last message
		if i == len(messages)-1 {
			m.Header.Set("NATS-Batch-Commit", "true")
		}

		if _, err := js.PublishMsg(context.Background(), m); err != nil {
			return err
		}
	}
	return nil
}
```

A Cleaner Approach with Orbit

Orbit is Synadia’s higher-level client library that simplifies NATS development. Its JetStream extension package handles all the batch header management for you:

```go
batch, err := jetstreamext.NewBatchPublisher(js)
if err != nil {
	return err
}

batch.Add("orders.warehouse", []byte("ship item"))
batch.Add("orders.payment", []byte("charge card"))

ack, err := batch.Commit(ctx, "orders.notification", []byte("send email"))
if err != nil {
	// None of the messages were published
	return fmt.Errorf("batch publish failed: %w", err)
}
// All messages published successfully!
```

You just add messages and call commit. Orbit handles batch IDs, sequence numbers, everything. Same guarantees, much cleaner code.

Wrapping Up

Atomic batch publishing in NATS 2.12 removes the risk of partial writes when several related messages go into the same stream. Whether you’re doing event sourcing or need coordinated message delivery across services, this feature gives you the consistency guarantees you need.

No more worrying about inconsistent state from failed connections mid-publish. It’s all or nothing—exactly as it should be.
